|

Navigation

Search

|

Why data contracts need Apache Kafka and Apache Flink

Tuesday December 2, 2025. 10:00 AM , from InfoWorld

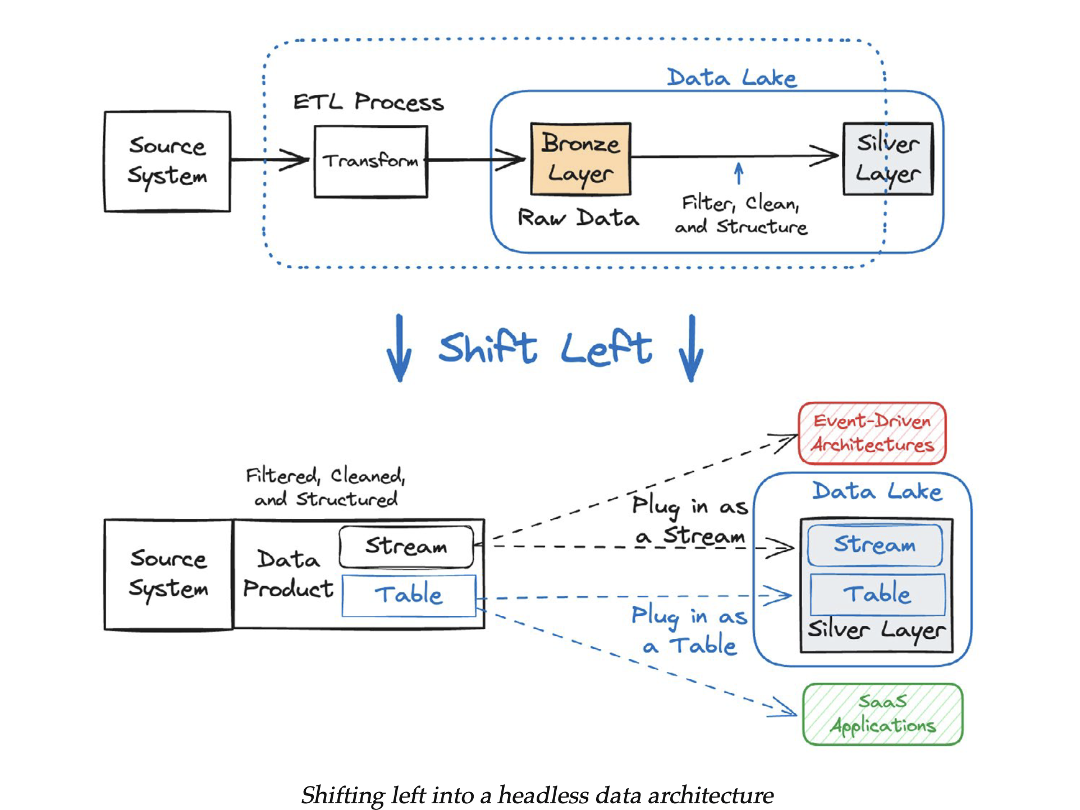

This is the nature of many modern data pipelines. We’ve mastered the art of building distributed systems, but we’ve neglected a critical part of the system: the agreement on the data itself. This is where data contracts come in, and how they fail without the right tools to enforce them. The importance of data contracts Data pipelines are a popular tool for sharing data from different producers (databases, applications, logs, microservices, etc.) to consumers to drive event-driven applications or enable further processing and analytics. These pipelines have often been developed in an ad hoc manner, without a formal specification for the data being produced and without direct input from the consumer on what data they expect. As a result, it’s not uncommon for upstream producers to introduce ad hoc changes consumers don’t expect and can’t process. The result? Operational downtime and expensive, time-consuming debugging to find the root cause. Data contracts were developed to prevent this. Data contract design requires data producers and consumers to collaborate early in the software design life cycle to define and refine requirements. Explicitly defining and documenting requirements early on simplifies pipeline design and reduces or removes errors in consumers caused by data changes not defined in the contract. Data contracts are an agreement between data producers and consumers that define schemas, data types, and data quality constraints for data shared between them. Data pipelines leverage distributed software to map the flow of data and its transformation from producers to consumers. Data contracts are foundational to properly designed and well behaved data pipelines. Why we need data contracts Why should data contracts matter to developers and the business? First, data contracts reduce operational costs by eliminating unexpected upstream data changes that cause operational downtime. Second, they reduce developer time spent on debugging and break-fixing errors. These errors are caused downstream from changes the developer introduced without understanding their effects on consumers. Data contracts provide this understanding. Third, formal data contracts aid the development of well-defined, reusable data products that multiple consumers can leverage for analytics and applications. The consumer and producer can leverage the data contract to define schema and other changes before the producer implements them. The data contract should specify a cutover process, so consumers can migrate to the new schema and its associated contract without disruption. Three important data contract requirements Data contracts have garnered much interest recently, as enterprises realize the benefits of shifting their focus upstream to where data is produced when building operational products that are data-driven. This process is often called “shift left.” Confluent In a shift-left data pipeline design, downstream consumers can share their data product requirements with upstream data producers. These requirements can then be distilled and codified into the data contract. Data contract adoption requires three key capabilities: Specification — define the data contract Implementation — implement the data contract in the data pipeline Enforcement — enforce the data contract in real-time There are a variety of technologies that can support these capabilities. However, Apache Kafka and Apache Flink are among the best technologies for this purpose. Apache Kafka and Apache Flink for data contracts Apache Kafka and Apache Flink are popular technologies for building data pipelines and data contracts due to their scalability, wide availability, and low latency. They provide shared storage infrastructure between producers and consumers. In addition, Kafka allows producers to communicate the schemas, data types, and (implicitly) the serialization format to consumers. This shared information also allows Flink to transform data as it travels between the producer and consumer. Apache Kafka is a distributed event streaming platform that provides high-throughput, fault-tolerance, and scalability for shared data pipelines. It functions as a distributed log enabling producers to publish data to topics that consumers can asynchronously subscribe to. In Kafka, topics have schemas, defined data types, and data quality rules. Kafka can store and process streams of records (events) in a reliable and distributed manner. Kafka is widely used for building data pipelines, streaming analytics, and event-driven architectures. Apache Flink is a distributed stream processing framework designed for high-performance, scalable, and fault-tolerant processing of real-time and batch data. Flink excels at handling large-scale data streams with low latency and high throughput, making it a popular choice for real-time analytics, event-driven applications, and data processing pipelines. Flink often integrates with Kafka, using Kafka as a source or sink for streaming data. Kafka handles the ingestion and storage of event streams, while Flink processes those streams for analytics or transformations. For example, a Flink job might read events from a Kafka topic, perform aggregations, and write results back to another Kafka topic or a database. Kafka supports schema versioning and can support multiple different versions of the same data contract as it evolves over time. Kafka can keep the old version running with the new version, so new clients can leverage the new schema while existing clients are using the old schema. Mechanisms like Flink’s support for materialized views help accomplish this. How Kafka and Flink help implement data contracts Kafka and Flink are a great way to build data contracts that meet the three requirements outlined earlier—specification, implementation, and enforcement. As open-source technologies, they play well with other data pipeline components that are often built using open source software or standards. This creates a common language and infrastructure around which data contracts can be specified, implemented, and enforced. Flink can help enforce data contracts and evolve them as needed by producers and consumers, in some cases without modifying producer code. Kafka provides a common, ubiquitous language that supports specification while making implementation practical. Kafka and Flink encourage reuse of the carefully crafted data products specified by data contracts. Kafka is a data storage and sharing technology that makes it easy to enable additional consumers and their pipelines to use the same data product. This is a powerful form of software reuse. Kafka and Flink can transform and shape data from one contract into a form that meets the requirements of another contract, all within the same shared infrastructure. You can deploy and manage Kafka yourself, or leverage a Kafka cloud service and let others manage it for you. Any data producer or consumer can be supported by Kafka, unlike strictly commercial products that have limits on the supported producers and consumers. You could get enforcement via a single database if all the data managed by your contracts sits in that database. But applications today are often built using data from many sources. For example, data streaming applications often have multiple data producers streaming data to multiple consumers. Data contracts must be enforced across these different databases, APIs, and applications. You can specify a data contract at the producer end, collaborating with the producer to get the data in the form you need. But enforcement at the producer end is intrusive and complex. Each data producer has its own authentication and security mechanisms. The data contract architecture would need to be adapted to each producer. Every new producer added to the architecture would have to be accommodated. In addition, small changes to schema, metadata, and security happen continuously. With Kafka, these changes can be managed in one place. Kafka sits between producers and consumers. With Kafka Schema Registry, producers and consumers have a way of communicating what is expected by their data contract. Because topics are re-usable, the data contract may be re-usable directly or it could be incrementally modified and then re-used. Data contract enforcement in Kafka. Confluent Kafka also provides shared, standardized security and data infrastructure for all data producers. Schemas can be designed, managed, and enforced at Kafka’s edge, in cooperation with the data producer. Disruptive changes to the data contract can be detected and enforced there. Data contract implementation needs to be simple and built into existing tools, including continuous integration and continuous delivery (CI/CD). Kafka’s ubiquity, open source nature, scalability, and data re-usability make it the de facto standard for providing re-usable data products with data contracts. Best practices for developers building data contracts As a data engineer or developer, data contracts can help you deliver better software and user experiences at a lower cost. Here are a few guidelines for best practices as you start leveraging data contracts for your pipelines and data products. Standardize schema formats: Use Avro or Protobuf for Kafka due to their strong typing and compatibility features. JSON Schema is a suitable alternative but less efficient. Automate validation: Use CI/CD pipelines to validate schema changes against compatibility rules before deployment. Make sure your code for configuring, initializing, and changing Kafka topic schemas is part of your CI/CD workflows and check-ins. Version incrementally: Use semantic versioning (e.g., v1.0.0, v1.1.0) for schemas and document changes. This should be part of your CI/CD workflows and run-time checks for compatibility. Monitor and alert: Set up alerts for schema and type violations or data quality issues in Kafka topics or Flink jobs. Collaborate across teams: Ensure producers and consumers (e.g., different teams’ Flink jobs) agree on the contract up front to avoid mismatches. Leverage collaboration tools (preferably graphical) that allow developers, business analysts, and data engineers to jointly define, refine, and evolve the contract specifications. Test schema evolution: Simulate schema changes in a staging environment to verify compatibility with Kafka topics and Flink jobs. You can find out more on how to develop data contracts with Kafka here. Key capabilities for data contracts Kafka and Flink provide a common language to define schemas, data types, and data quality rules. This common language is shared and understood by developers. It can be independent of the particular data producer or consumer. Kafka and Flink have critical capabilities to make data contracts practical and widespread in your organization: Broad support for potential data producers and consumers Widespread adoption, usage, and understanding, partly due to their open source origins Many implementations available, including on-prem, cloud-native, and BYOC (Bring Your Own Cloud) The ability to operate at both small and large scales Mechanisms to modify data contracts and their schemas as they evolve Sophisticated mechanisms for evolving schemas and reusing data contracts when joining multiple streams, each with its own data contract. Data contracts require a new culture and mindset that encourage data producers to collaborate with data consumers. Consumers need to design and describe their schema and other data pipeline requirements in collaboration with producers, and guided by developers and data architects. Kafka and Flink make it much easier to specify, implement, and enforce the data contracts your collaborative producers and consumers develop. Use them to get your data pipelines up and running faster, operating more efficiently, without downtime, while delivering more value to the business. — New Tech Forum provides a venue for technology leaders—including vendors and other outside contributors—to explore and discuss emerging enterprise technology in unprecedented depth and breadth. The selection is subjective, based on our pick of the technologies we believe to be important and of greatest interest to InfoWorld readers. InfoWorld does not accept marketing collateral for publication and reserves the right to edit all contributed content. Send all inquiries to doug_dineley@foundryco.com.

https://www.infoworld.com/article/4086004/why-data-contracts-need-apache-kafka-and-apache-flink.html

Related News |

25 sources

Current Date

Dec, Sun 28 - 19:58 CET

|

MacMusic |

PcMusic |

440 Software |

440 Forums |

440TV |

Zicos

MacMusic |

PcMusic |

440 Software |

440 Forums |

440TV |

Zicos